Dichotomy between ‘describing’ and ‘predicting’ with data-science algorithms

There are two primary goals when applying an algorithm. We approach the data either to describe them as they are, or to use them to extrapolate about future likelihoods. Often we can combine the two, but they can also be exclusive applications.

There are several different classes of algorithms that are typically applied:

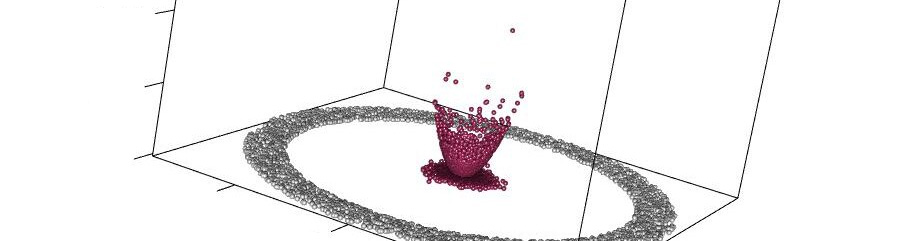

Clustering: this is a descriptive process. We try to assign the data to one or more categories based on how close the instances are to each other. Often this feeds into other modelling algorithms. It lets us reduce the total number of instances/rows within a dataset by characterising each one in relation to a particular, or known, instance. The goal is to identify the group (cluster) to which particular instances belong. This requires, to varying degrees depending on the particular algorithm, that the data can be analysed within a framework that assumes there are discrete groups. Clustering algorithms are, however, descriptive tools. They identify similarities, and therefore are not used to determine likely future outcomes.
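To make the idea concrete, here is a minimal k-means sketch in plain Python. k-means is only one of many clustering algorithms, and the notes don't name one. The function name `kmeans`, the choice of k = 2, the initialisation from the first k points and the sample points are all my own assumptions for illustration.

```python
import math


def kmeans(points, k, iterations=100):
    """Minimal k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points, until assignments stop changing."""
    # Deterministic initialisation (an assumption, for reproducibility): the first k points.
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(iterations):
        # Assignment step: label each point with the index of its closest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical 2-D data with two obvious groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (0.9, 1.1), (8.2, 7.9), (7.8, 8.1)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

Once it converges, each centroid can stand in for all of its members, which is the row-reduction use described above. Nothing in the output says anything about future data. It only describes the groups already present.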

Prediction*: This too is a descriptive tool, but it may also have predictive power. These algorithms model the relationships between various continuous, numeric variables.

*I’m going to need a better name for this if I am to recall it correctly. I suspect that this is really looking towards linear regression, and Geraldine is a little wary of spooking the horses in the short run by using technical terms. Will update.
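If the footnote is right and this is heading towards linear regression, the simplest case is one predictor fitted by ordinary least squares. This sketch shows both uses at once. The fitted slope describes the relationship in the observed data, and the same line evaluated at an unseen x gives a prediction. The helper `fit_line` and the toy data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one predictor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
intercept, slope = fit_line(xs, ys)

# Descriptive use: the slope summarises how y changes with x in the observed data.
print(f"y = {intercept} + {slope} * x")

# Predictive use: evaluate the fitted line at an x value we have not observed.
prediction = intercept + slope * 6
print(prediction)
```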

Classification: Classification algorithms attempt to identify sets of rules that can be used to differentiate between discrete classes of labelled data. The resulting model estimates the likelihood that a particular instance has a particular value in a defined (target) variable. This can then be used to assign previously unclassified rows to their most likely class. Again, the model can be used both to describe the existing data and to predict the class of unlabelled cases. I suspect we’re entering the world of logit regressions etc. here; will update.
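Following the logit hunch, here is a minimal logistic-regression sketch trained by batch gradient descent on log-loss. It assumes a single numeric feature and 0/1 labels. The helper names, learning rate, number of epochs and data are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, labels, lr=0.1, epochs=5000):
    """Fit P(label = 1 | x) = sigmoid(b0 + b1 * x) by batch gradient descent on mean log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log-loss, the gradient w.r.t. each coefficient is the mean of (p - y) * feature.
        errors = [sigmoid(b0 + b1 * x) - y for x, y in zip(xs, labels)]
        b0 -= lr * sum(errors) / n
        b1 -= lr * sum(e * x for e, x in zip(errors, xs)) / n
    return b0, b1


# Labelled data: small x values are class 0, large ones class 1.
xs = [0.5, 1.0, 1.5, 2.0, 4.0, 4.5, 5.0, 5.5]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
b0, b1 = fit_logistic(xs, labels)

# Estimate the likelihood of class 1 for unlabelled cases, then assign the most likely class.
probabilities = {x: sigmoid(b0 + b1 * x) for x in (1.2, 4.8)}
for x, p in probabilities.items():
    print(x, round(p, 3), "class", int(p >= 0.5))
```

The model outputs a probability, and the 0.5 cut-off is what turns it into a class assignment. With perfectly separable toy data like this, the coefficients keep growing the longer you train, which is one reason library implementations usually add regularisation.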

Dependency analysis: The goal is to estimate how likely an entity is to occur within a subset, given the presence of another entity. There are two sets of rules that might be developed: Association Analysis, where these elements co-occur at the same time, and Sequential Analysis, where there is a temporal (ordered) dependency between the items. These analyses can be descriptive, predictive, or both.
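The notes don't name specific algorithms here. As an assumption, I've sketched the support and confidence measures that association-rule mining (e.g. Apriori-style methods) filters on, restricted to pairs of single items to keep it short. I've also added a simple ordered variant for sequential analysis. The function names, thresholds and shopping data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def association_rules(transactions, min_support=0.3, min_confidence=0.6):
    """Return single-item rules (lhs, rhs, support, confidence).

    support(A -> B)    = fraction of transactions containing both A and B
    confidence(A -> B) = count(A and B) / count(A), an estimate of P(B | A)
    """
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in set(t))
    pair_counts = Counter(
        pair for t in transactions for pair in combinations(sorted(set(t)), 2)
    )
    rules = []
    for (a, b), together in pair_counts.items():
        support = together / n
        if support < min_support:
            continue
        # Co-occurrence is symmetric, so check the rule in both directions.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = together / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return rules


def sequential_confidence(sequences, first, then):
    """Fraction of sequences containing `first` in which `then` appears
    somewhere after the first occurrence of `first`."""
    with_first = [s for s in sequences if first in s]
    followed = [s for s in with_first if then in s[s.index(first) + 1:]]
    return len(followed) / len(with_first) if with_first else 0.0


transactions = [
    {"bread", "butter"},
    {"bread", "butter", "jam"},
    {"bread", "milk"},
    {"butter", "jam"},
    {"bread", "butter", "milk"},
]
rules = association_rules(transactions)
for lhs, rhs, support, confidence in rules:
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")

# Ordered purchase histories for the sequential case.
sequences = [
    ["phone", "case", "charger"],
    ["phone", "charger"],
    ["case", "phone"],
    ["laptop", "mouse"],
]
print(sequential_confidence(sequences, "phone", "charger"))
```

The only difference between the two is whether order matters. `association_rules` ignores it, while `sequential_confidence` counts `then` only if it comes after `first`.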

Deviation Detection: Identifying whether events fall within the range of expected outcomes. The key intuition here is that a deviating event lies outside of what is expected of a member of a given class of entities.
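One simple way to formalise "outside of what is expected" is a z-score test against the history of the same class. This is my own assumption, because the notes don't specify a method. The cut-off of 3 standard deviations is a common convention rather than a rule, and the function name and data are hypothetical.

```python
import statistics


def is_deviation(history, new_value, threshold=3.0):
    """Flag new_value if it lies more than `threshold` standard deviations
    from the mean of past observations for the same class.
    Assumes history has at least two values that are not all identical."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    z = (new_value - mean) / stdev
    return abs(z) > threshold, z


# Hypothetical daily spend for one customer, i.e. the "class" we compare against.
history = [52.0, 48.5, 50.0, 51.2, 49.8, 47.9, 50.6]
normal = is_deviation(history, 50.5)  # within the expected range
spike = is_deviation(history, 95.0)   # far outside it
print(normal)
print(spike)
```

Extreme values pull the mean and standard deviation around. When the history may already contain anomalies, robust alternatives such as the median and median absolute deviation are often preferred.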