Clustering Algorithms Overview

Less precise than other forms of modelling (specifically classification). The development of useful information from the data and the identification of a particular cluster often requires significant domain expertise.

It is a form of unstructured learning as the algorithm defines the clusters, and groups the instances accordingly. The aim of the application of a clustering algorithm it to identify groups of rows that are both similar to each other, and are also dissimilar rows that are in other groups.

They map out the relative distance between different sets of instances that are ‘closer’ to each other.

There are two key forms of ‘distance’ in this context, the within group similarity: intra-group distance, and the between group dissimilarity: inter-group distance.

They can be used as summarisation tools: e.g. reducing the computational intensity that is involved in training a neural network analysis, by training it on a reduced selection of rows, or based on cluster specific prototypes

It is always wise to use a variety of clustering tools when analysing data. They are are for fishing expeditions where we don’t know what is in the lake. The risk is that in only using a particular algorithm we might, say identify three groups within a k-Means analysis because that is what we have specified, but may miss out on distinct subgroups within those groups that could be identified through examining a dendrograph.

Different Forms of Clusters:

Well separated clusters – instances that are part of each cluster are closer to the other elements in that cluster than they are to the elements of other clusters

Centre-Based Clusters – instances are closer to the centrepoint of a particular cluster than than they are to the centrepoint of any other cluster

Contiguous Clusters – instances are closer to another member of the same cluster than they are to a member of any other cluster

Density Based Clusters – Clusters are identified after the use of noise reducing algorithms

Proprietary/Pre-specified clusters: Instances are sorted according to some pre-specified category based on domain expertise

Categories of Clustering Algorithms:

Partitioning Methods: The number of clusters are specified in advance. Such methods are examples of complete clustering where each row is allocated to exactly one cluster, these classifications are both exhaustive and non-overlapping (e.g. k-Means). Best used where data are concave, normalised, and have Gaussian distributions.

Hierarchical/Agglomerative Clustering: Rows are sorted into tree structures. Branches break from parent rows at optimal points which identifies the relationship between and so the relative proximity of distinct groups from each other. These methods are another form of complete clustering as at every stage of the hierarchy each row is a member of one cluster, and is only a member of at most one cluster.

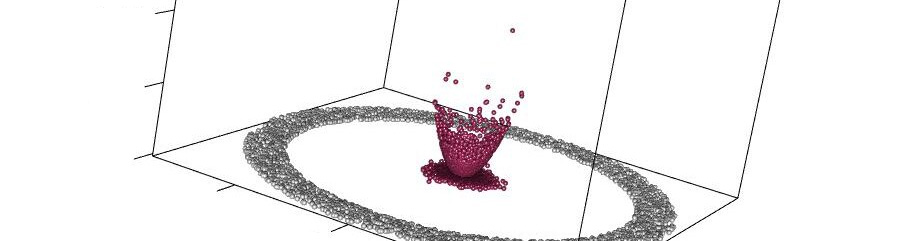

Density Based: These methods are used where there are dense areas of rows which are separated by areas of lower density. The sparsely populated areas are screened out or de-weighted, therefore not all rows are assigned to a cluster. This results in partial clustering, though where rows are assigned to a cluster, they are only assigned to a single cluster non-overlapping (e.g. DBScan).

Model-Based Clustering: The distribution of clusters is parameterised, i.e. it is assumed that they follow particular distributions – such as normal distribution – and given that, population statistics e.g. mean, variance, are estimated, along with the probability that each row is a member of a particular cluster. As any row potentially has a likelihood of being part of any given class, and so these are a class of clustering algorithms which are overlapping, but may also be either partial or complete in their clustering.

Grid Based Methods: Organises the rows into clusters linearly – where rows that are furthest from each other are separates to the greatest degree – and those that fall into the middle are not assigned to a group (partial clustering). Useful for identifying outliers, but members of any cluster are only assigned to a single cluster, so this is an algorithm that is non-overlapping.

Fuzzy Clustering: Every row belongs to every cluster, with probability p*

p~[0,1], Σ (i=1… n, n= number of variables/attributes) = 1