Hierarchical Clustering

Hierarchical clustering groups data rows into trees of clusters, there are two main approaches, bottom up, and top down.

Aggolmerative hierarchical clustering: every row is assigned to its own cluster initially. Clusters that are closest to each other are then merged and the process iterates with fewer/larger clusters until all clusters have merged into a single cluster, or some terminating condition is met.

Divisive hierarchical clustering: is a top down approach which sees the data grouped into a single cluster initially, Clusters are broken down into sub-clusters until all rows are in their own cluster, or some other condition is met. Check with Geraldine about how the clusters are sub-divided, especially the first sub-division. The cluster that is to be split is the cluster with the largest SSE (sum of squared errors). The terminating condition can be the number of clusters required, or a minimum cluster size.

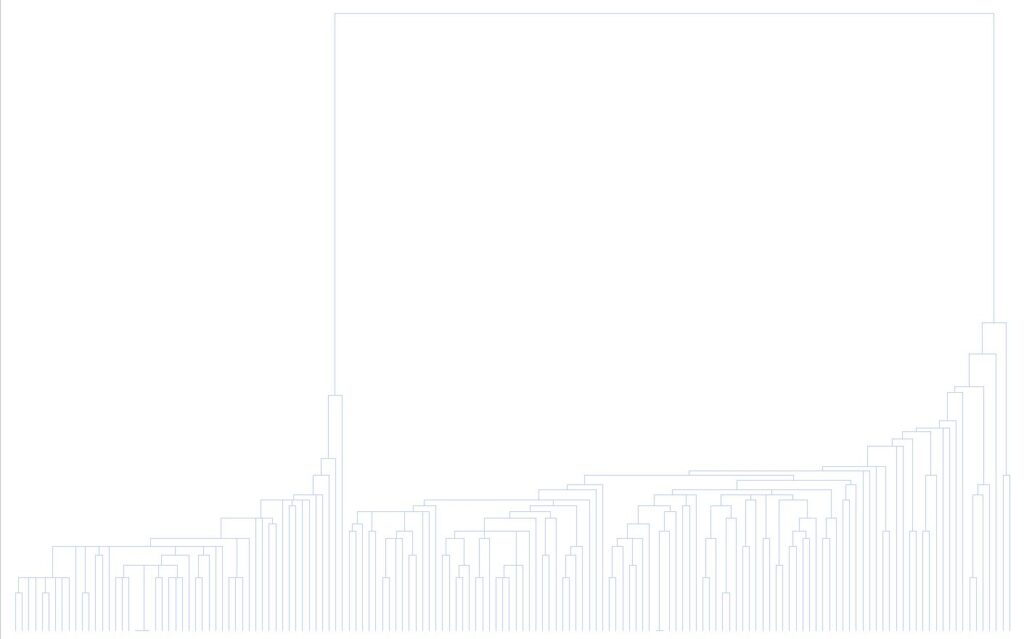

The output for a hierarchical clustering:

This is an example of agglomerative clustering dendrogram, using the ‘Irish’ data set.

The ‘top-down’ divisive approach seems to need a cluster model to work from.

Merge/Split points:

The decision to merge/split clusters is sharply defined by the way in which we compare/assess them. tThere are several methods, each of which can lead to very different associative outcomes.

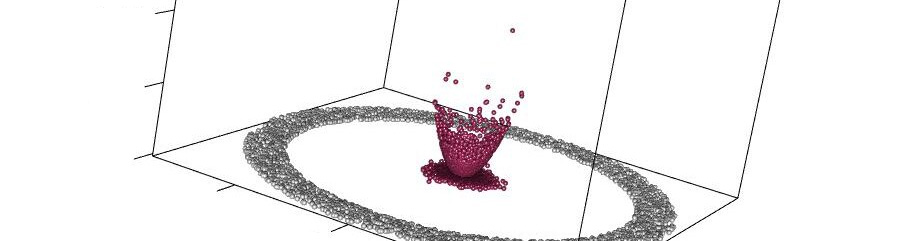

Min/single linkage: The distance between two clusters is the distance between the row in cluster A that is closest to a row in cluster B, and that row in cluster B. This method can work well with concave clusters, but is vulnerable to the effects of outliers

Max/complete linkage: The distance between the two least similar rows in each of the clusters is used to determine the distance between the two clusters. This method works better with compact concave clusters. Larger clusters tend to be divided, and density can impact how clusters are merged, but as Geraldine, has noted, this is contested within the literature.

Average/average linkage: The distance between all points in each cluster is considered pairwise, and the average of all these links is calculated. This form of clustering is biased towards convex, globular, clusters and is robust to noise/outliers.

Mean Distance/Centroid linkage: The ideal/centroid row is calculated based on the means of the other rows. Again biased towards convex clusters, but robust to noise/outliers. Also, less computationally intensive than average linkage.

Wards method: Calculates the Sum of Squared Errors (SSE) of the two clusters if they were to merge and become a single cluster. Proximity is calculated based on that score, i.e. the two clusters that have the lowest increase in SSE when they merge are the clusters that merge in any given iteration. Again, works best with convex clusters, and is insensitive to noise/outliers.

Strengths/Weaknesses:

Good clustering, also shows the relatedness of different clusters, however hierarchical clusters are computationally intensive and cannot roll back should there be an error in how they split/merge clusters. Issues can arise where data is noisy, or in high dimensions.