Critique of “Philosophy and the practice of Bayesian statistics”

In this piece I use Gelman and Shalizi’s (hereafter GS) “Philosophy and the practice of Bayesian statistics” (2013) to look at the difficulties involved in interpreting statistics, and at what those difficulties mean for the falsity and falsification of statistical models. I develop the consequences of the authors’ insight regarding the dynamics of failure in statistical models, and consider how this may affect the insights available both to the philosophically minded statistician and to those who are less so.

Overview of GS

In their article GS attempt to firm up the philosophical foundations of Bayesian statistics. The task they have taken on is to distinguish what we actually do, when we do the things we call Bayesian statistics, from what we think we do: two things that are often very far apart. The focus of their attack is what they call Bayesian inference, which the authors hold has led to fundamental errors in the application of Bayesian statistics. GS promote an alternative paradigm for the use of Bayesian statistics and support their claims with both theoretical arguments and the practical research experience of the authors.

Through this paper the authors aim to reframe Bayesian inference as hypothetico-deductivism rather than inductive inference. To this end they seek to establish the actual role of prior distributions in Bayesian models, and note how crucial aspects of model checking and model revision are not contained within Bayesian confirmation theory. GS present the current theoretical landscape of statistical inference as binary: either falsification through Fisher’s ‘p’ (null hypotheses, confidence intervals and so on included) or an inverse-probability Bayesian methodology that slowly accretes new knowledge through inductive methods. GS attempt to create a third way that makes use of Bayesian methodologies within a falsification paradigm.

GS make strong claims about what Bayesian inference is and how it is used. They discuss what they call “the usual story” of Bayesian inference, a received view which is, in the authors’ view, “wrong”. The authors describe a view of Bayesian statistics in which the researcher calculates the probability of many alternative possibilities given the data that have been gathered, perhaps under several different models, and then either selects the alternative with the highest probability as the ‘truest’ or averages the probability over several alternatives or models.

Perhaps the simplest critique of their paper is that they have created a straw man and filled him full of arrows. To an extent this is what Morey et al. (2013) argue in their critique of GS. Morey et al. claim that there are two types of Bayesian, the humble and the over-confident, and that GS’s Bayesian is the over-confident type. This type of Bayesian is an atypical case, and so a trivial one with regard to how we should interrogate the appropriate application of Bayesian statistics. However, Morey et al. go on to attack GS on the grounds that “The core of the[ir] view seems to be that model checking is not regulated by an inductive, but rather by a deductive mode of inference” (Morey et al. 2013). There is a suggestion of incommensurability between these opposing camps: for GS all models are false, while for Morey et al. the fact that no statistical model is falsifiable makes the GS position incoherent. While Morey et al. claim that GS lack “a theory of inference using model checking”, the sense from GS is that this has been dealt with.

Probing parameters’ weaknesses

GS’s argument is that within a given model it is possible to deduce certain probabilities, though it is not possible to inductively infer the truth of the propositions of that model. The strategy GS advocate is not one of establishing which of many models we ought to use (nor why the model is true), but rather one of considering the model’s lack of robustness, i.e. the marginal propensity for the probability of a given alternative to change given a relatively small change in the data. According to the authors, every model [within the social sciences] is merely a simplification or approximation of reality, and so every model is wrong. Hence it is incumbent upon the researcher to know the vulnerabilities of the models she uses. For GS, it is the process of establishing the ways in which our models fail that allows us to learn.
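The essay’s reading of robustness can be made concrete with a toy conjugate model. This is an illustration of my own, not GS’s procedure: it assumes a binomial likelihood with a Beta(1, 1) prior (the function names are hypothetical) and measures how far the posterior mean moves when a single observation in the data is recoded.

```python
# Illustrative sketch only: the Beta-binomial model and these names are
# assumptions made for this example, not taken from GS.

def posterior_mean(successes, trials, a=1.0, b=1.0):
    """Posterior mean of a proportion theta under a Beta(a, b) prior
    and a binomial likelihood: (a + successes) / (a + b + trials)."""
    return (a + successes) / (a + b + trials)


def sensitivity_to_one_observation(successes, trials, a=1.0, b=1.0):
    """How far the posterior mean moves when one failure in the data is
    recoded as a success, holding the number of trials fixed."""
    before = posterior_mean(successes, trials, a, b)
    after = posterior_mean(successes + 1, trials, a, b)
    return after - before


# Same observed proportion (60%), very different sample sizes.
print(round(sensitivity_to_one_observation(6, 10), 4))      # → 0.0833
print(round(sensitivity_to_one_observation(600, 1000), 4))  # → 0.001
```

In this model the shift is exactly 1/(a + b + n), so the same one-observation change matters roughly eighty times more in the small study than in the large one.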

Article as manifesto

The ostensible targets of this article are “philosophers of hypothetico-deductive inference” and “philosophically minded Bayesian statisticians”. The authors hope that the former will look again at Bayesian data analysis, and that the latter will alter the interpretation they place on the results garnered from their analyses. There is probably a third group that the authors hope to influence: non-philosophically minded statisticians. In Section 3 the authors characterise the relationship of the statistician to her model as akin to the principal-agent problem. While the statistician knows that there are limits to how far the model she has developed describes the data universe, the model (or the software operating within its confines) ‘believes’, in the Bayesian sense, that it fully describes reality. Yet no model is complete: there is no way of including every alternative of every possibility in a particular model, or even in a finite set of models. Where models are unfalsifiable, a researcher who is not expert in the internal dynamics of her model is incapable of assessing the robustness of her results.

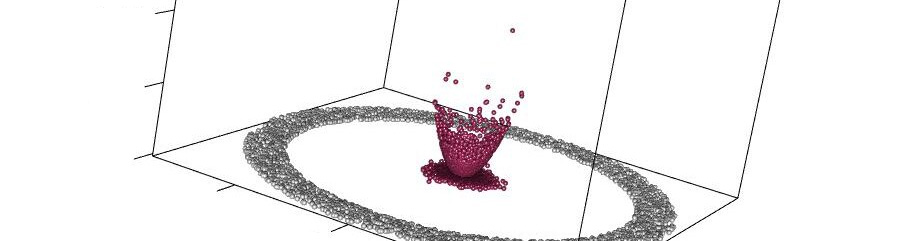

The concern is that the researcher will engage in the form of Bayesian updating in which she runs alternative models to find the one that most closely fits the distribution of a given set of data. This likelihood hacking risks overfitting the very data used to select the model. Without a topographical understanding of the probability space mapped out by the model, the researcher will probably be unable to accommodate its idiosyncrasies correctly.
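The risk can be shown with a deliberately extreme sketch of my own (all names hypothetical): every candidate predictor is pure noise, yet picking the one that best fits a training sample still yields an apparently meaningful fit, which largely vanishes on fresh data. Selecting by correlation stands in here for selecting by likelihood; for a single-predictor linear model with Gaussian errors the two choose the same candidate.

```python
import random

# Illustrative sketch: simulated noise data and hypothetical names throughout.


def pearson(xs, ys):
    """Pearson correlation, written out to avoid external dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def select_by_fit(n_train=50, n_test=1000, n_candidates=200, seed=1):
    """Choose, from many candidate predictors, the one that best fits a
    training sample, then score that choice on fresh data. Every candidate
    is pure noise, so any fit it shows is produced by the search itself."""
    rng = random.Random(seed)
    n = n_train + n_test
    y = [rng.gauss(0, 1) for _ in range(n)]
    best_r, best_x = -1.0, None
    for _ in range(n_candidates):
        x = [rng.gauss(0, 1) for _ in range(n)]
        r = abs(pearson(x[:n_train], y[:n_train]))
        if r > best_r:
            best_r, best_x = r, x
    held_out_r = abs(pearson(best_x[n_train:], y[n_train:]))
    return best_r, held_out_r


in_sample, held_out = select_by_fit()
print(f"in-sample |r| = {in_sample:.2f}, held-out |r| = {held_out:.2f}")
```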

“what Bayesian updating does when the model is false (i.e., in reality, always) is to try to concentrate the posterior on the best attainable approximations to the distribution of the data, ‘best’ being measured by likelihood. But depending on how the model is misspecified, and how θ represents the parameters of scientific interest, the impact of misspecification on inferring the latter can range from non-existent to profound. Since we are quite sure our models are wrong, we need to check whether the misspecification is so bad that inferences regarding the scientific parameters are in trouble. It is by this non-Bayesian checking of Bayesian models that we solve our principal–agent problem.”
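A toy version of the quoted point, under assumptions of my own: the data come from a hypothetical two-humped process, and the analyst fits a single normal distribution. The maximum-likelihood fit stands in for where a large-sample posterior under a vague prior would concentrate. For one scientific target (the overall mean) the misspecification is harmless; for another (how much of the data sits near the centre) it is badly misleading.

```python
import math
import random

# Illustrative sketch: the mixture "truth" and the normal model are assumptions
# chosen for this example, not taken from GS.


def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of N(mu, sigma**2)."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))


def fit_normal(data):
    """Maximum-likelihood estimates for a normal model: the sample mean and
    the standard deviation with divisor n."""
    n = len(data)
    mu = sum(data) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in data) / n)
    return mu, sigma


rng = random.Random(0)
# Hypothetical 'true' process: an equal mixture of N(-2, 1) and N(2, 1).
data = [rng.gauss(rng.choice([-2.0, 2.0]), 1.0) for _ in range(20_000)]
mu, sigma = fit_normal(data)

# Target 1, the overall mean: the misspecified model recovers it (close to 0).
print(f"fitted mean {mu:.2f}, fitted sd {sigma:.2f}")

# Target 2, how much of the data lies near the centre: the normal fit puts its
# peak close to where the mixture has a trough, so it overstates this share.
observed_centre = sum(abs(x - mu) < 0.5 for x in data) / len(data)
predicted_centre = normal_cdf(mu + 0.5, mu, sigma) - normal_cdf(mu - 0.5, mu, sigma)
print(f"share within 0.5 of the mean: observed {observed_centre:.3f}, "
      f"model {predicted_centre:.3f}")
```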

The authors are specifically concerned about situations where there are multiple equivalent maxima within the probability space described by a particular set of parameters (or within the part of that space reachable given our limited capacity to measure). In such cases, as the dataset grows, reweighting the parameters will keep producing different likelihood optimisations (assuming at least some sampling noise in the data).
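The simplest version of this is a model that cannot tell two parameter values apart. The sketch below is a hypothetical illustration, not an example from GS: if observations are modelled as y_i ~ N(theta^2, 1), only theta^2 is identified, so the likelihood has two equal peaks at plus and minus the square root of the mean of y, and more data sharpens both peaks without ever choosing between them.

```python
import random

# Illustrative sketch: the model y_i ~ N(theta**2, 1) is a hypothetical example
# of non-identifiability, chosen because its two maxima are easy to see.


def log_likelihood(theta, ys):
    """Gaussian log-likelihood, up to a constant, for y_i ~ N(theta**2, 1).
    Only theta**2 enters, so theta and -theta always score identically."""
    return -0.5 * sum((y - theta * theta) ** 2 for y in ys)


rng = random.Random(42)
ys = [rng.gauss(4.0, 1.0) for _ in range(200)]  # simulated data, theta**2 = 4

grid = [i / 100 for i in range(-400, 401)]      # theta from -4 to 4
scores = {theta: log_likelihood(theta, ys) for theta in grid}
best = max(scores.values())
# Exact equality is safe here: each symmetric pair is computed identically.
maxima = [theta for theta, score in scores.items() if score == best]
print(maxima)  # two maxima, one near -2 and one near +2
```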

In these scenarios, or in others where the model is insufficient to describe the data usefully, the model must be reformulated if we are to improve upon it. In the big-data era (which seems to be the real target of much of this argument: the authors seem to suggest that no reader of their article would simply run models until they got a sufficiently useful result, but that others might) the problem is that the parameterisation of a model is exogenous to the Bayesian statistical process. Unless a parameter is present in a particular model it cannot be represented, and the decision as to whether it is appropriate to introduce a parameter is made by the researcher herself, not by the model.

Thus it does not matter how much machine learning you throw at a particular problem: unless the principal includes all the necessary parameters, the model remains incomplete. The model may be capable of excluding particular parameters from the given set, and may (through analysis of regularities in error terms that ought to be randomly distributed) be able to establish that missing variables exist, but it can only expand the set of included parameters in a random fashion.
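A minimal residual check, with simulated data and hypothetical variable names, illustrates the point: the outcome depends on two variables but the model includes only one. The leftover errors are not random, but the regularity becomes visible only when the residuals are set against the omitted variable, which the researcher has to think of and measure.

```python
import random

# Illustrative sketch: simulated data; x2 plays the role of a variable the
# researcher left out, z that of an irrelevant candidate.


def fit_line(xs, ys):
    """Least-squares intercept and slope for y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


def correlation(a, b):
    """Pearson correlation between two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


rng = random.Random(7)
n = 2000
x1 = [rng.gauss(0, 1) for _ in range(n)]  # included in the model
x2 = [rng.gauss(0, 1) for _ in range(n)]  # hypothetical omitted variable
z = [rng.gauss(0, 1) for _ in range(n)]   # irrelevant candidate variable
y = [a + 2 * b + rng.gauss(0, 1) for a, b in zip(x1, x2)]

intercept, slope = fit_line(x1, y)
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x1, y)]

# The residuals are structured, but the structure only shows up when the
# researcher thinks to look at the right variable.
print(round(correlation(residuals, x2), 2))  # large
print(round(correlation(residuals, z), 2))   # near zero
```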

What can models teach us?

It is here that we discover the epistemological challenges of GS’s thesis, and I think this is where Morey et al. were going with their concern about the lack of “a theory of inference using model checking”. While there is some discussion of what a test of the marginal sensitivity of the model ought to be capable of, the authors’ preferred mechanism for establishing the characteristic failures of a particular model is graphing the data. There is no systematic procedure for doing so; the nature of the graph would have to be determined by the nature of the data. The choice of graph would necessarily highlight particular characteristics of the data, so judgement would be needed to select an appropriate format. These aspects of the data would then have to be interpreted, again something that cannot be objectively systematised: the interpretation would be a function of the model used and of the data it was possible to gather.

Having intuited an appropriate means of interrogating the data graphically, it would then be necessary to observe a relationship that is not transparent in the data, introduce a new parameter or variable to create a new model, and then see how the posterior was affected. The example the authors use is a study of voting patterns across the United States. Initially the authors believed that the likelihood of a particular set of people (poor, middle-income or rich) voting Republican was governed by a relatively simple model.

On the basis of the model failing to fit the data, the authors graphed the data and established that the effect of rising income on the propensity to vote Republican actually varied with the wealth of the state. Thus learning does occur, but as a consequence of applying external expertise. Knowledge arises from the ability to identify relationships in a way that cannot be narrowly proceduralised.
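The structure of that revision can be sketched with simulated data. Everything here is hypothetical: the coefficients are invented, income is standardised, and a linear probability slope (ordinary least squares on a 0/1 vote) stands in for the logistic regression one would more usually fit. The point is only the extra structure, letting the income slope differ between poorer and richer states, and how it changes the picture a single pooled slope gives.

```python
import math
import random

# Illustrative sketch: invented coefficients and simulated voters; not GS's data
# or model.


def ols_slope(xs, ys):
    """Least-squares slope of ys on xs (a linear probability slope when ys is 0/1)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def simulate_voters(income_log_odds, n, rng):
    """Hypothetical voters: standardised income and a 0/1 Republican vote whose
    log-odds rise with income at the rate income_log_odds."""
    incomes, votes = [], []
    for _ in range(n):
        income = rng.gauss(0, 1)
        p = 1 / (1 + math.exp(-income_log_odds * income))
        incomes.append(income)
        votes.append(1 if rng.random() < p else 0)
    return incomes, votes


rng = random.Random(3)
# Invented coefficients: a steeper income gradient in poorer states.
poor_x, poor_y = simulate_voters(1.0, 5000, rng)
rich_x, rich_y = simulate_voters(0.2, 5000, rng)

# Model 1: a single income slope shared by every state.
pooled = ols_slope(poor_x + rich_x, poor_y + rich_y)
# Model 2: fit each group separately, so the slope can depend on state wealth.
slope_poor = ols_slope(poor_x, poor_y)
slope_rich = ols_slope(rich_x, rich_y)
print(f"pooled {pooled:.3f}, poor states {slope_poor:.3f}, rich states {slope_rich:.3f}")
```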

Morey et al. do not take up or challenge this problem either; their solution to the limits of practical Bayesian statistics is what they call the open-minded [and humble] Bayesian, who recognises that models are incomplete and similarly intuits appropriate ways of extending the model space.

The falsity of models

Where Morey et al. differ from GS is that they believe models are neither true nor false, while GS are certain that they are false. Thus for GS it is possible to elicit more information from one’s system of analysis by tracing out the characteristics of the model that led to its failure to fit the data. By testing the model against new or simulated data it is possible to probe its internal dynamics, which ought to allow the researcher to ascertain whether her original results were robust or, if not, whether they were false positives or false negatives. This probing of the model’s probability space ought, the authors hold, to give the researcher information she would not otherwise have had, making it easier for her to work out what is missing from the model.
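A minimal posterior predictive check, in the spirit of the checking GS advocate though not taken from their paper, can be sketched as follows. Assumptions: the model is Poisson with a Gamma(1, 1) prior on its rate, the test statistic is the variance-to-mean ratio, and the data are hypothetical counts simulated to be overdispersed. Replicated datasets are drawn from the fitted model; if almost none look like the observed data, the model has failed in an identifiable way.

```python
import math
import random

# Illustrative sketch: the Poisson-Gamma model, the test statistic and the
# simulated data are all assumptions made for this example.


def poisson_draw(lam, rng):
    """One Poisson(lam) draw by Knuth's multiplication method (fine for small lam)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def dispersion(counts):
    """Variance-to-mean ratio; roughly 1 for data that really are Poisson."""
    n = len(counts)
    mean = sum(counts) / n
    return sum((c - mean) ** 2 for c in counts) / (n - 1) / mean


def posterior_predictive_p(counts, n_reps=1000, a0=1.0, b0=1.0, seed=0):
    """Posterior predictive check of a Poisson model with a Gamma(a0, rate b0)
    prior. Returns the share of replicated datasets at least as overdispersed
    as the observed one; values near 0 or 1 flag a misfit."""
    rng = random.Random(seed)
    shape, rate = a0 + sum(counts), b0 + len(counts)  # conjugate Gamma posterior
    observed = dispersion(counts)
    hits = 0
    for _ in range(n_reps):
        lam = rng.gammavariate(shape, 1.0 / rate)             # rate drawn from the posterior
        replicate = [poisson_draw(lam, rng) for _ in counts]  # data the model predicts
        if dispersion(replicate) >= observed:
            hits += 1
    return hits / n_reps


rng = random.Random(1)
# Hypothetical counts: each has its own gamma-distributed rate, so the data are
# more variable than any single Poisson distribution allows.
data = [poisson_draw(rng.gammavariate(2.0, 2.5), rng) for _ in range(100)]
p_value = posterior_predictive_p(data)
print(f"observed dispersion {dispersion(data):.2f}, posterior predictive p = {p_value:.3f}")
```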

Popper argues that we progress in science by narrowing the range of possibly explanatory theories through falsification. When we falsify a proposition we know that the proposition is not true. There are a couple of problems with this, arising from the mechanisms available to us for falsification. First, any measurement we make is valid only within a defined degree of certainty; it is not possible to make an exact point estimate of a variable. Second, no procedure is beyond critique: there is always some probability of procedural error. Thus we can never conclude absolutely that a proposition is false, only that our falsification is correct with a certain probability. With technological and methodological improvements this probability approaches one, but only asymptotically. Consequently, the move GS make is to argue that if probabilistic models are never falsifiable, they ought to examine a domain where every model is false.

GS choose the social sciences as the domain for this argument because there we use our models knowing that they are false. This seems to be their resolution of the difficulty of falsification in Popper.

The alternative view that GS discuss is Kuhn’s paradigm-shift model of science. This model encounters the same difficulty as Popper’s: there is always a way of arguing that a given model retains a certain explanatory force. The authors argue that Kuhn makes use of the Quine–Duhem thesis: any given paradigm relies upon ‘auxiliary’ hypotheses, and facts used to defeat the primary paradigm can be disputed by recourse to the same measurement-cum-methodological problems that so undermine the strict Popperian. If it can always be argued that the difficulties encountered by the paradigm lie with the ‘auxiliary’ hypotheses, then no defeat of the paradigm is possible. If no defeat is possible, then whence comes the crisis that causes the revolution? Assuming that these positions are indeed polar opposites, the authors place themselves “closer to Popper’s [position] than to Kuhn’s”.

I am not certain what their purpose in affixing a claim to Popper is, unless it is an effort to garner the support of those who see themselves as closer to Popper than to Kuhn, which would be a peculiarly Kuhnian strategy for avowed Popperians.

The many levels of paradigms

The argument GS make allows for what I will call meta-paradigms: higher-level, abstract paradigms upon which subsidiary paradigms rely for the deductions possible within their domains. The authors argue that we do not engage in inductive inference with our models, but rather treat the higher-level paradigms as axiomatic to the paradigms we are making use of. Specifically, the generalisations we draw from sampling are “deductions from the statistical properties of random samples, and the ability to actually conduct such sampling”, not knowledge that precipitates from the model itself. A naïve account of Kuhn and Popper suggests a linear sequence of universal paradigms, each held to be true by everyone simultaneously. If, however, we move to a meta-paradigmatic model in which multiple paradigms may be used simultaneously within a given domain, or even by a single researcher, then the model is no longer linear but network-based.

Here instead we have a system of paradigms where, independent of the constraints of a particular model, the researcher can pluck parameters from across the paradigm space which she believes may afford more explanatory power to her newly generated model. GS’s mechanism of probing the robustness of Bayesian models can be used to create a counterpoint to the likelihood hacking that can otherwise occur in a system that focusses only on goodness of fit.

Just as a great challenge of mathematics is to link locally coherent domains into a unified whole (so that the theorems of one domain can be translated into others), so the task of such a meta-paradigmatic system would be to create connections between otherwise divorced areas of study, so that the affordances offered by the parameters and measurements of those domains can be used in domains closer to our areas of interest. This system is more akin to an ecology of research than to a monocrop of universal paradigms. Different strains of research can exist, and cross-pollinate, for as long as they are successful. Then, as technology changes the research environment (say, by allowing narrower ranges of measurement), the degree of success particular models have in describing the universe will alter. Areas of relative stability could appear in zones of Kuhnian coherence, where the problem-solving aspects of science continue internally through a GS analysis of the dynamics of falsification of particular models set within a broader meta-paradigm, until a great event, or a series of such events, causes that meta-paradigm to decohere and requires researchers to stretch out into other domains in search of models that allow consistency to be established.

Comments

Being statisticians of a philosophical bent, GS make much use of the topological nature of statistics, and this has greatly influenced how I have read their text. Data can be viewed as a mere abstraction of reality, but data are also used to expose regularities in the observable universe that are not immediately apparent to our senses. GS’s dependence upon graphing data is an interesting development of this. I think their primary concern is the naïve researcher who repeatedly presses F5 to re-run Monte Carlo experiments until an iteration throws up a statistically significant result, or the researcher who expects that throwing ever more data at a model will allow her to develop a meaningful understanding of the research domain.
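The arithmetic behind the first worry is simple. Assuming each re-run is an independent test of a true null hypothesis at the conventional 0.05 level (an idealisation, and an assumption of this sketch), the chance that at least one of k runs comes up “significant” is 1 - 0.95^k. The sketch checks the formula by simulation; the function names are hypothetical.

```python
import random

# Illustrative sketch: independent re-runs under a true null are an assumption.


def chance_of_false_positive(k, alpha=0.05):
    """P(at least one of k independent tests of a true null gives p < alpha)."""
    return 1 - (1 - alpha) ** k


def simulate_reruns(k, alpha=0.05, trials=50_000, seed=0):
    """Monte Carlo version: under a true null a valid p-value is uniform on
    (0, 1), so each re-run is a uniform draw compared against alpha."""
    rng = random.Random(seed)
    hits = sum(any(rng.random() < alpha for _ in range(k)) for _ in range(trials))
    return hits / trials


print(round(chance_of_false_positive(1), 3))   # → 0.05
print(round(chance_of_false_positive(20), 3))  # → 0.642
estimate = simulate_reruns(20)
print(round(estimate, 3))                       # close to the line above
```

Twenty presses of F5 are therefore more likely than not to produce a “significant” result from nothing.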

It is, according to GS, to the parameterisation of her models that the researcher must attend. If she is not expert in the nature of her parameters and in their failings, she will not be able to improve upon the power of her models. GS’s attempt to alter the interpretation of Bayesian statistics is, I believe, both useful and necessary. It may be that the immediate audience of the British Journal of Mathematical and Statistical Psychology is populated by Morey et al.’s ‘humble Bayesians’, but it is not certain that outside that particular domain the subtleties separating the humble from the over-confident are well understood. As the methods of Bayesian statistics are transferred further and further from their natural home, a robust discussion of what the statistics actually describe, and of what one can technically infer from such methods, is useful.

Bibliography

Gelman, A., & Shalizi, C. R. (2013). Philosophy and the practice of Bayesian statistics. British Journal of Mathematical and Statistical Psychology, 66(1), 8-38.

Morey, R. D., Romeijn, J.-W., & Rouder, J. N. (2013). The humble Bayesian: Model checking from a fully Bayesian perspective. British Journal of Mathematical and Statistical Psychology, 66(1), 68-75.